The Witcher 3 Next Gen Update: three months on, I’m still not convinced

CDPR is future-proofing its flagship games, but at a cost to today’s players.


The best bit of PC gaming is having the speed and power to be able to whack everything on Ultra and marvel as your rig effortlessly spits out a silky-smooth rendition of whatever game it is, with graphical features activated that would make the latest consoles wave a little white flag and melt into a puddle of liquid convenience.

The worst bit is when you can’t whack everything on Ultra, and so have to Fiddle: endlessly tweaking a bewildering array of switches and sliders in order to find the perfect balance between performance and visual fidelity. Incessantly calibrating a piece of software with all the complexity of one that monitors the status of a nuclear reactor, not just for your specific hardware configuration but also for your own tolerances as a human being. Some people don’t mind a bit of screen tearing if it unlocks some performance headroom. Others, like me, abhor it: it’s synonymous with “unplayable” as far as I’m concerned. Some people can take or leave raytracing. Me? If it’s there, I want it turned on, otherwise I’ll be wincing every time a screen space reflection rudely disappears when I dare to tilt the camera.

Now, this is all par for the course with high-end PC gaming: you pays a lot of money, you makes a lot of choices. But it tends to only be an annoyance with the latest games, and particularly graphically intensive ones at that. Older games are usually the ones you can crank to your heart’s content. The Witcher 3, for example, which has never been considered a slouch in the graphics department, used to happily run on my PC with everything on max, in 4K ultrawide, without dropping a single frame. And it looked excellent while doing so, because CDPR have a habit of building their games for tomorrow’s hardware.

Well, as far as 2015 action RPG The Witcher 3 goes, tomorrow came to an end last December. Unfortunately, for those of us trying to enjoy 2022 action RPG The Witcher 3, tomorrow is a way off. The clock has been reset. It is no longer a comfortable “whack everything on Ultra+” game, but an “incessant fiddling until you come to a begrudging compromise” game. This is, frankly, distressing.

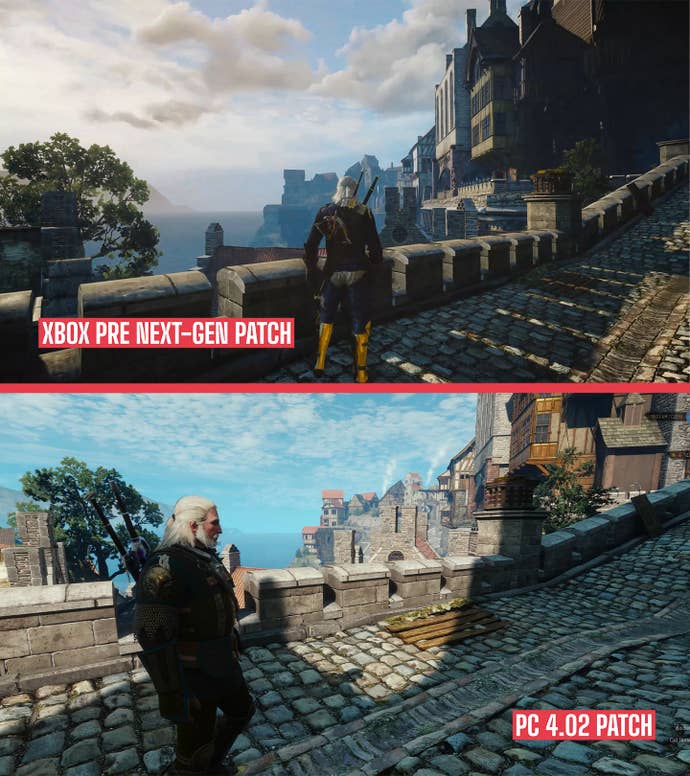

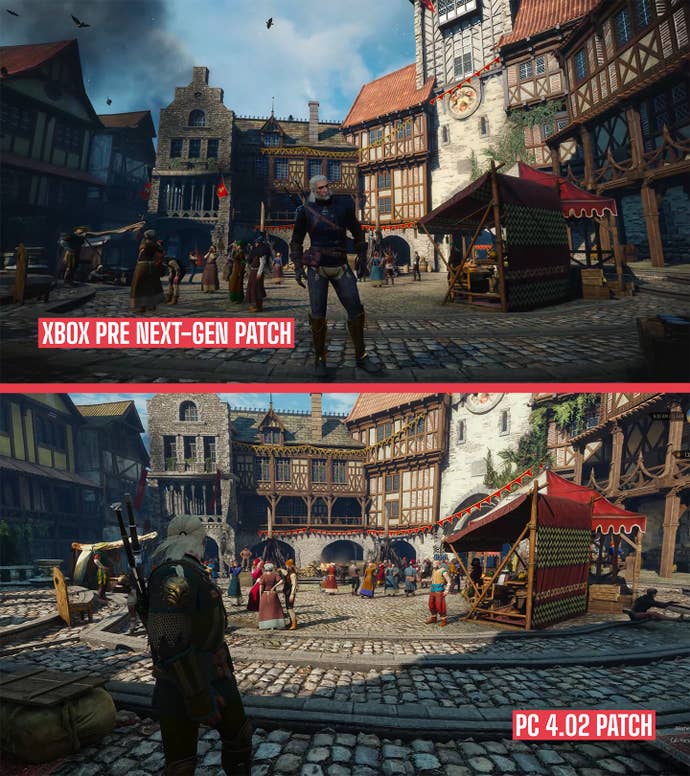

Let’s be clear: I’m not saying that the next gen update doesn’t look good. It looks great. The Witcher 3 is a game full of gothic nooks, nobbly glass windows, muddy puddles, and huge bodies of water surrounded by medieval towers and other pointy bits. It benefits hugely from the implementation of raytracing. But activating this flagship RTX feature comes, of course, at a massive performance cost. On consoles, it tanks performance to unacceptable sub-30fps levels. On PC, back in December, the first rollout of the next gen update absolutely obliterated framerates across the board, prompting many user complaints and leading a significant number of players to roll the update back and restore the previous, perfectly adequate version.

Luckily the latest Witcher 3 patch, 4.02 at the time of writing, improves matters somewhat: a less intensive option has been added to the raytracing suite, rather ludicrously adding a performance mode to the quality mode, and the whole thing generally performs a lot better. For my money, I’ve found that it makes Witcher 3 with raytracing a viable prospect now, whereas before it seemed like we’d have to wait for 5000 series GPUs to get it running properly.

Which, as I’ve said, is just the nature of high-end PC gaming. If you’re running the latest graphically intensive games, you have to expect – even with the most bleeding-edge hardware on the market – to have to do some tweaking in order to hit the sweet spot between fidelity and performance on your particular hardware.

But The Witcher 3 isn’t one of the latest graphically intensive games. I played it on a launch PS4 almost a decade ago. And though I understand the merits of keeping everything under the hood fresh and up to date, patching in support for the latest GPU innovations, ensuring that the game will still be a looker in 2034, I can’t help but wonder if the gains are really worth it. If the enhanced fidelity on offer is quite enhanced enough to justify this huge reset of the clock that starts ticking when a new system-busting game is released: a countdown to when the median PC spec catches up to its ambitions, and everyone can enjoy it at its best.

Are the results worth it, in the end? In my heart of hearts, I don’t think they are. And I don’t want to be misunderstood here: I think real-time raytracing is one of the most exciting features to hit the GPU space in years. It’s been something of a holy grail in video game graphics for decades – I remember reading about early Quake demos of the tech in PC Gamer in the early 2000s – and to be living at a time when we are on the cusp of it being the standard in how game engines handle shadowing and reflections is genuinely very cool. It could fundamentally change how game worlds are designed. It could conceivably lead to new gameplay experiences that take into account the very nature of how light behaves.

But in the case of The Witcher 3, it feels like we had something that looked beautiful and ran beautifully, and now it’s been meddled with. After a few days of messing around with the settings on my machine, I think I’ve got it to a spot where I can make peace with how smoothly it runs vs how nice it looks. In truth, I think the best and most stress-free compromise is simply to play it on the Xbox Series X in performance mode. Which, in terms of visual bells and whistles, isn’t far off what we had with the Xbox One X performance mode on the previous version when running on this hardware. That’s the mad thing: The Witcher 3 was so prescient in its future-proofing that the last gen version pulled off a gorgeous 4K60 presentation on hardware that wasn’t even conceived of when it launched.

When the next gen update was announced, I wondered out loud if The Witcher 3 even needed one. A lot of people thought it was a stupid question. But over three months since the debut of the new and improved game, I’m still not convinced. Ask me in 2033, I guess.
